What is Data Management?

Simon Burge

Data management is the practice of collecting, safeguarding, and using information in a cost-effective and efficient manner.

The primary objective of data management is to help individuals, businesses, and connected devices use data in a compliant way to improve decisions and actions, maximising the benefit to the organisation.

Given that companies are increasingly using intangible assets to create value, a strong data management strategy has become crucial for optimising resource utilisation.

This comprehensive article offers deeper insight into the subject of data management, and sheds light on the various disciplines it encompasses.

It also highlights the most effective practices for managing data, the obstacles organisations typically face, and the advantages that can be gained from implementing a successful data management strategy.

Data Management Processes

The process of data management encompasses a variety of functions that work together to ensure the information within corporate systems is accurate, available, and accessible.

Whilst the bulk of the work is carried out by IT professionals and data management teams, business users are also typically involved in certain aspects of the process to ensure the data meets their requirements and complies with the policies governing its use.

The field of data management encompasses a broad range of factors, including but not limited to:

- Creating, accessing, and updating data across various data tiers

- Storing data across both on-premises and multiple cloud platforms

- Ensuring high availability and disaster recovery of data

- Utilising data in an ever-expanding range of applications, analytics, and algorithms

- Safeguarding data privacy and security

- Archiving and securely disposing of data in accordance with retention schedules and compliance regulations.

Why is Data Management Important?

Data is becoming increasingly recognised as a valuable corporate asset that can aid in making better-informed business decisions, optimising operations, improving marketing campaigns, reducing costs, and ultimately increasing revenue and profits.

However, poor data management practices can result in incompatible data silos, inconsistent data sets, and data quality issues.

This can limit an organisation’s ability to leverage business intelligence and analytics applications, and even lead to erroneous conclusions.

Effective data management has become even more critical in recent years due to the growing number of regulatory compliance requirements.

These include data privacy and protection laws such as the General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA).

Furthermore, companies are collecting larger volumes and a wider range of data types, which are characteristic features of the big data systems many organisations have adopted.

Without proper data management, such environments can quickly become cumbersome and difficult to navigate.

How Modern Data Management Systems Work

Modern organisations require an efficient data management solution that can effectively handle data management tasks across a diverse yet unified data tier.

This is accomplished through the use of data management platforms, which may include:

- databases

- data lakes

- data warehouses

- big data management systems

- data analytics tools

Together, these components function as a “data utility” to provide the necessary data management capabilities for an organisation’s applications, as well as the analytics and algorithms that utilise the data generated by the software.

While current tools have made significant strides in automating traditional management tasks for database administrators (DBAs), the sheer size and complexity of most database deployments often still require some degree of manual intervention, which can increase the likelihood of errors.

To mitigate this risk, the development of new data management technology, specifically the autonomous database, aims to minimise the need for manual data management tasks.

By doing so, it helps reduce errors and improve the overall efficiency and effectiveness of data management processes.

The Role of Continuous Integration

Continuous integration (CI) is a foundational step towards continuous software delivery.

It’s a practice where developers commit small, incremental code changes to a centralised source repository, and each commit initiates a series of automated builds and tests.

This practice allows developers to catch bugs early and automatically, helping to prevent them from reaching production.

A typical continuous integration pipeline involves several steps, starting from code commit, then progressing to automated linting/static analysis, dependency capture, and basic unit testing, before creating a build artifact.

Source code management systems such as GitHub or GitLab offer webhook integration with CI tools like Jenkins to run automated builds and tests after every code check-in.
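The stage order described above can be sketched as a small runner that stops at the first failing stage. This is only an illustration in Python: the stage names follow the text, while `run_pipeline` and the placeholder checks are hypothetical and do not correspond to any CI tool's API.

```python
def run_pipeline(stages):
    """Run (name, step) pairs in order; stop at the first step that returns False."""
    completed = []
    for name, step in stages:
        if not step():
            return completed, name  # name of the stage that failed
        completed.append(name)
    return completed, None


# Placeholder steps (assumptions): a real pipeline would invoke a linter,
# a dependency resolver, a test runner, and a build tool here.
stages = [
    ("lint", lambda: True),
    ("dependency capture", lambda: True),
    ("unit tests", lambda: False),  # simulate a failing test run
    ("build artifact", lambda: True),
]

completed, failed_stage = run_pipeline(stages)
```

Because the runner halts on the failing unit tests, no build artifact is produced from the broken commit, which is the behaviour the practice relies on.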

Data Management Platforms

A data management platform serves as the foundational system for collecting and analysing large volumes of data across an organisation.

Commercial data platforms typically come equipped with software tools for management, developed by the database vendor or third-party vendors.

These data management solutions assist IT teams and DBAs in performing typical tasks such as:

- identifying, alerting, diagnosing, and fixing faults in the database system or underlying infrastructure

- assigning database memory and storage resources

- applying changes to database design

- optimising database query responses for faster application performance.

Cloud-based database platforms have become increasingly popular, allowing businesses to cost-effectively scale upwards or downwards quickly.

Some platforms are available as a service, providing organisations with even greater savings.

What is an Autonomous Database?

An autonomous database is a cloud-based database that uses artificial intelligence (AI), machine learning algorithms, and automation to perform tasks such as:

- performance tuning

- security patching

- backups

It’s designed to eliminate the need for manual database management tasks traditionally performed by database administrators (DBAs), allowing them to focus on more strategic activities.

An autonomous database can automatically adjust its resources to meet the needs of changing workloads and provide high availability and scalability.

It also provides advanced security features to protect against cyber threats and ensures regulatory compliance.

Major cloud providers, such as Oracle, Amazon, and Microsoft, offer autonomous database services to their customers.

Types of Data Management Functions

The data management process comprises various disciplines that involve multiple steps, ranging from data processing and data storage, to governance of data formatting and utilisation in operational and analytical systems.

Establishing a data architecture is often the first step, especially in large organisations with significant amounts of data to manage.

A data architecture provides a framework for managing data and deploying databases and other data platforms, including specific technologies to suit individual applications.

Databases are the primary platform used to store corporate data.

They consist of a collection of data that is organised to facilitate access, update, and management.

They are used in both transaction processing systems that generate operational data like customer records and sales orders, and data warehouses that store consolidated data sets from business systems for business intelligence (BI) and analytics.

As a result, database administration is a critical data management function.

After databases have been set up, performance monitoring and tuning are required to maintain acceptable response times on database queries that users run to retrieve information from the data stored in them.

Other administrative tasks include database design, configuration, installation and updates, data security, database backup and recovery, and application of software upgrades and security patches.

A database management system (DBMS) is the primary technology used to deploy and manage databases.

DBMS software acts as an interface between databases and their users, including database administrators (DBAs), end users, and applications that access them.

File systems and cloud object storage services are alternative data platforms to databases, but they store data in less structured ways than databases, offering greater flexibility on the types of data that can be stored and how the data is formatted.

However, these platforms may not be suitable for transactional applications.

There are several essential data management practices, including:

Data Modeling

This involves creating diagrams that depict the relationships between data elements and how data flows through systems.

Data Integration

This is the process of combining data from different sources for operational and analytical purposes.

Data Governance

This sets policies and procedures to ensure data consistency throughout an organisation.

Data Quality Management

This focuses on correcting data errors and inconsistencies.

Master Data Management (MDM)

This establishes a standardised set of reference data on entities such as customers and products.

Typical Data Management Tools

There are various options available for managing data using a wide range of technologies, tools, and techniques.

The following are some examples of these options for different aspects of data management:

Database Management Systems

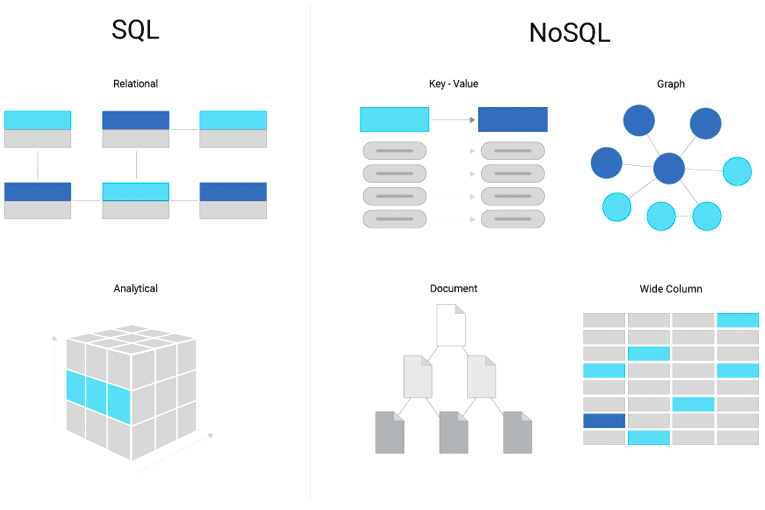

The relational database management system is the most commonly used type of DBMS.

These systems organise data into tables with rows and columns that contain database records, and related records in different tables can be connected through the use of primary and foreign keys.

This eliminates the need for duplicate data entries.

Relational databases are optimised for structured transaction data and are built around the SQL query language and a rigid data model.

Their support for the ACID transaction properties – atomicity, consistency, isolation, and durability – makes them the top choice for transaction processing applications.
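To make the role of keys and transactions concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The `customers` and `orders` tables and their columns are invented for illustration. The second transaction shows atomicity: one of its statements violates the foreign key, so the whole transaction is rolled back.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite only enforces foreign keys when enabled
conn.executescript("""
    CREATE TABLE customers (
        id   INTEGER PRIMARY KEY,
        name TEXT NOT NULL
    );
    CREATE TABLE orders (
        id          INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers(id),
        total       REAL NOT NULL
    );
""")

# "with conn" commits on success and rolls back if an exception escapes.
with conn:
    conn.execute("INSERT INTO customers (id, name) VALUES (1, 'Acme Ltd')")
    conn.execute("INSERT INTO orders (customer_id, total) VALUES (1, 250.0)")

# Atomicity: the second insert references a customer that does not exist,
# so the first insert in the same transaction is undone as well.
try:
    with conn:
        conn.execute("INSERT INTO orders (customer_id, total) VALUES (1, 99.0)")
        conn.execute("INSERT INTO orders (customer_id, total) VALUES (42, 10.0)")
except sqlite3.IntegrityError:
    pass

order_count = conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]

# The customer name is stored once and reached through the key, not duplicated per order.
rows = conn.execute(
    "SELECT c.name, o.total FROM orders o JOIN customers c ON c.id = o.customer_id"
).fetchall()
```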

However, there are now other viable options for different data workloads.

Most of these options are categorised as NoSQL databases, which do not impose rigid requirements on data models and database schemas.

As a result, they can store unstructured and semi-structured data, such as sensor data, internet clickstream records, and network, server, and application logs.

The four main types of NoSQL systems are:

- document databases, which store data elements in structures similar to documents

- key-value databases, which store unique keys and associated values

- wide-column stores, which use tables with a large number of columns

- graph databases, which connect related data elements in a graph format
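The four models can be loosely pictured with plain Python data structures. This is a conceptual sketch only: the field names and values are invented, and none of it reflects the storage format or API of any particular NoSQL product.

```python
# Document model: a self-describing, nested record addressed by an id.
document = {
    "_id": "order-1001",
    "customer": {"name": "Acme Ltd"},
    "items": [{"sku": "A1", "qty": 2}],
}

# Key-value model: a unique key mapped to a value the store does not interpret.
key_value = {"session:42": b"opaque-bytes", "session:43": b"more-bytes"}

# Wide-column model: each row key holds its own, possibly sparse, set of columns.
wide_column = {
    "sensor-7": {"2024-01-01T00:00": 21.5, "2024-01-01T00:05": 21.7},
    "sensor-9": {"2024-01-01T00:00": 19.0},
}

# Graph model: nodes connected by edges, here as an adjacency mapping.
graph = {"alice": {"bob"}, "bob": {"carol"}, "carol": set()}


def reachable(graph, start):
    """Return every node that can be reached from start by following edges."""
    seen, frontier = set(), [start]
    while frontier:
        node = frontier.pop()
        for neighbour in graph[node]:
            if neighbour not in seen:
                seen.add(neighbour)
                frontier.append(neighbour)
    return seen


connections = reachable(graph, "alice")
```

The traversal at the end hints at why graph stores suit relationship-heavy queries, while the other three shapes favour lookups by id, key, or row key.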

Although NoSQL databases are not built around SQL, many now incorporate SQL elements and provide some level of ACID compliance.

Other database options include in-memory databases that accelerate I/O performance by storing data in server memory rather than on disk, and columnar databases designed for analytics applications.

Hierarchical databases, which predate relational and NoSQL systems and run on mainframes, are still in use.

Users can deploy databases on-premises or in the cloud, and some database vendors offer managed cloud database services, taking care of database deployment, configuration, and administration for users.

Data Integration

Extract, transform, and load (ETL) is the most commonly used data integration technique.

It involves extracting data from source systems, converting it into a consistent format, and loading the integrated data into a data warehouse or another target system.
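The three ETL steps can be sketched in a few lines of Python. Everything specific here is an assumption made for illustration: the CSV layout, the cleaning rules, and an in-memory SQLite database standing in for the data warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical export from a source system, with inconsistent formatting.
SOURCE_CSV = """order_id,customer,amount,currency
1, acme ltd ,250.00,gbp
2,Globex,99.5,GBP
3,,12.00,GBP
"""


def extract(text):
    """Extract: read raw rows from the source as dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: convert rows into one consistent format, dropping unusable ones."""
    cleaned = []
    for row in rows:
        customer = row["customer"].strip()
        if not customer:
            continue  # reject rows without a customer name
        cleaned.append((
            int(row["order_id"]),
            customer.title(),
            float(row["amount"]),
            row["currency"].strip().upper(),
        ))
    return cleaned


def load(conn, rows):
    """Load: write the integrated rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL, currency TEXT)"
    )
    with conn:
        conn.executemany("INSERT INTO orders VALUES (?, ?, ?, ?)", rows)


warehouse = sqlite3.connect(":memory:")
load(warehouse, transform(extract(SOURCE_CSV)))
loaded = warehouse.execute("SELECT * FROM orders ORDER BY order_id").fetchall()
```

An ELT variant would call `load` on the raw rows first and run the transformation inside the target platform afterwards.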

However, modern data integration platforms now offer various other integration methods.

One such method is extract, load, and transform (ELT), which is a variation of ETL that loads data into the target platform in its original form. ELT is often used for data integration in big data systems and data lakes.

ETL and ELT typically operate in batch mode and are executed at fixed intervals.

In contrast, real-time data integration applies changes to data as they occur.

Two approaches are used for real-time data integration: change data capture, which forwards changes made to the data in databases to a data warehouse or other target system, and streaming data integration, which combines streams of data continuously as they arrive.
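Change data capture can be illustrated with a simplified sketch. Production tools commonly read the database's transaction log; as a stand-in, this example uses SQLite triggers to record each change in a hypothetical `change_log` table, and a plain dictionary plays the role of the target system.

```python
import sqlite3

source = sqlite3.connect(":memory:")
source.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT);
    CREATE TABLE change_log (
        seq   INTEGER PRIMARY KEY AUTOINCREMENT,
        op    TEXT,
        id    INTEGER,
        email TEXT
    );
    CREATE TRIGGER customers_ins AFTER INSERT ON customers BEGIN
        INSERT INTO change_log (op, id, email) VALUES ('upsert', NEW.id, NEW.email);
    END;
    CREATE TRIGGER customers_upd AFTER UPDATE ON customers BEGIN
        INSERT INTO change_log (op, id, email) VALUES ('upsert', NEW.id, NEW.email);
    END;
    CREATE TRIGGER customers_del AFTER DELETE ON customers BEGIN
        INSERT INTO change_log (op, id, email) VALUES ('delete', OLD.id, NULL);
    END;
""")


def apply_changes(source, target, last_seq):
    """Forward changes recorded after last_seq to the target; return the new position."""
    rows = source.execute(
        "SELECT seq, op, id, email FROM change_log WHERE seq > ? ORDER BY seq",
        (last_seq,),
    ).fetchall()
    for seq, op, row_id, email in rows:
        if op == "delete":
            target.pop(row_id, None)
        else:
            target[row_id] = email
        last_seq = seq
    return last_seq


target = {}  # stands in for a data warehouse table
source.execute("INSERT INTO customers VALUES (1, 'a@example.com')")
source.execute("INSERT INTO customers VALUES (2, 'b@example.com')")
last_seq = apply_changes(source, target, 0)
after_first_sync = dict(target)

source.execute("UPDATE customers SET email = 'a@new.example' WHERE id = 1")
source.execute("DELETE FROM customers WHERE id = 2")
last_seq = apply_changes(source, target, last_seq)
```

Only the changes since the last recorded position are forwarded on each call, which is what distinguishes this approach from reloading the full table in a batch.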

Another option is data virtualization, which generates a virtual view of data from various systems for end-users without the need to physically load the data into a data warehouse.

Big Data Management

NoSQL databases, despite their name, often include SQL elements and offer some degree of ACID compliance.

Additionally, there are other database options such as in-memory databases that store data in server memory instead of on disk to improve I/O performance and columnar databases that are optimised for analytics applications.

Hierarchical databases, which predate both relational and NoSQL systems and run on mainframes, are still available for use.

Databases can be deployed either on-premises or in the cloud, and managed cloud database services are provided by some database vendors for users who prefer them to handle deployment, configuration, and administration.

Data Warehouses & Data Lakes

The two most commonly used data repositories for managing analytics data are data warehouses and data lakes.

Data warehouses are the more traditional approach, typically based on relational or columnar databases.

They store structured data that has been extracted from different operational systems and prepared for analysis.

Business intelligence querying and enterprise reporting are the primary use cases for data warehouses, enabling business analysts and executives to analyse sales, inventory management, and other key performance indicators.

An enterprise data warehouse integrates data from business systems across an organisation.

In large companies, individual subsidiaries and business units with management autonomy may build their own data warehouses.

Data marts, which contain subsets of an organisation’s data for specific departments or user groups, are another warehousing option.

In one approach, existing data warehouses are used to create different data marts.

In another approach, the data marts are built first and then used to populate a data warehouse.

Data lakes serve as repositories for large amounts of data that are utilised for predictive modeling, machine learning, and other advanced analytics applications.

They were initially constructed on Hadoop clusters but now increasingly use S3 and other cloud object storage services.

Data lakes may also be deployed on NoSQL databases, and different platforms can be used in a distributed data lake environment.

While data can be processed for analysis during ingestion, a data lake typically contains raw data that data scientists and other analysts need to prepare for specific analytical uses.

A new option for storing and processing analytical data has emerged called the data lakehouse.

It combines features of data lakes and data warehouses by fusing the flexible data storage, scalability, and lower cost of a data lake with the querying capabilities and more rigorous data management structure of a data warehouse.

Data Modeling

Data modelers use a range of techniques to develop conceptual, logical, and physical data models that capture data sets and workflows in a visual format, and align them with business requirements for transaction processing and analytics.

Entity relationship diagrams, data mappings, and schemas are among the common modeling techniques used.

Data models need to be updated when new data sources are added or when an organisation’s information requirements evolve.

Data Governance, Quality & MDM

Data governance is primarily an organisational process rather than a technical one.

Although there are software products that can assist with managing data governance programs, they are not essential.

Data governance programs typically involve a data governance council made up of business executives who collectively make decisions on common data definitions and corporate standards for creating, formatting, and utilising data.

Data management professionals may oversee governance programs, but they typically do not make the final decisions.

Data stewardship is an important part of governance programs, as it involves managing data sets and ensuring that end users adhere to approved data policies.

Depending on the size of an organisation and the extent of its governance program, the role of a data steward may be full-time or part-time.

Data stewards can come from either the business operations or the IT department, but they must have a thorough understanding of the data they are responsible for managing.

Data governance is strongly linked to the improvement of data quality.

Ensuring that data is of high quality is an essential component of effective data governance.

Metrics that track improvements in the quality of an organisation’s data are critical to demonstrating the business benefits of governance programs.

Different software tools support various key data quality techniques, including the following:

- Data profiling: involves scanning data sets to identify any outlier values that might be errors.

- Data cleansing (or scrubbing): involves fixing data errors by modifying or deleting bad data.

- Data validation: checks data against preset quality rules to ensure its accuracy and consistency.
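The three techniques can be sketched on a small in-memory data set. The records, the outlier threshold (three times the median absolute deviation), and the two validation rules are assumptions chosen for illustration, not a standard.

```python
import re
from statistics import median

records = [
    {"id": 1, "email": "ann@example.com", "age": 34},
    {"id": 2, "email": " BOB@EXAMPLE.COM ", "age": 41},
    {"id": 3, "email": "not-an-email", "age": 29},
    {"id": 4, "email": "dee@example.com", "age": 430},
]


def profile_outliers(rows, field, factor=3.0):
    """Profiling: flag ids whose value lies far from the median of the field."""
    values = [r[field] for r in rows]
    mid = median(values)
    mad = median([abs(v - mid) for v in values])  # median absolute deviation
    if mad == 0:
        return []
    return [r["id"] for r in rows if abs(r[field] - mid) > factor * mad]


def cleanse(rows):
    """Cleansing: repair fixable formatting problems (stray spaces, letter case)."""
    return [{**r, "email": r["email"].strip().lower()} for r in rows]


EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")
RULES = {
    "email_format": lambda r: EMAIL_RE.match(r["email"]) is not None,
    "age_range": lambda r: 0 <= r["age"] <= 120,
}


def validate(rows):
    """Validation: report which preset rules each record fails."""
    failures = {}
    for r in rows:
        failed = [name for name, rule in RULES.items() if not rule(r)]
        if failed:
            failures[r["id"]] = failed
    return failures


suspects = profile_outliers(records, "age")
cleaned = cleanse(records)
failures = validate(cleaned)
```

Here cleansing repairs record 2, so validation only reports the records that need a human decision: the malformed email in record 3 and the implausible age in record 4.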

Master data management (MDM) is closely linked to data governance and data quality management, although its adoption has been less widespread than the other two.

This is partly due to the complexity of MDM programs, which are mostly limited to larger organisations.

MDM involves creating a central repository of master data for selected data domains; the consolidated version of each entity is often referred to as a golden record.

The MDM hub stores the master data, which is then used by analytical systems for consistent enterprise reporting and analysis.

The hub can also push updated master data back to source systems, if required.
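As a rough sketch of how a hub might consolidate a golden record, the example below merges two source records for the same customer. The survivorship rule used here (take the most recently updated non-empty value for each field) is one common choice and is an assumption, as are the record layouts.

```python
from datetime import date

# The same customer as held by two hypothetical source systems.
crm = {
    "customer_key": "C-100",
    "name": "Acme Ltd",
    "phone": "",
    "updated": date(2024, 3, 1),
}
billing = {
    "customer_key": "C-100",
    "name": "ACME Limited",
    "phone": "+44 20 7946 0000",
    "updated": date(2024, 1, 15),
}


def golden_record(records, fields):
    """For each field, keep the newest non-empty value across the source records."""
    newest_first = sorted(records, key=lambda r: r["updated"], reverse=True)
    merged = {}
    for field in fields:
        merged[field] = next((r[field] for r in newest_first if r.get(field)), None)
    return merged


golden = golden_record([crm, billing], ["customer_key", "name", "phone"])
```

The name comes from the more recent CRM record, while the phone number falls back to billing because the CRM value is empty.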

Data observability is a developing practice that can enhance data quality and data governance programs by offering a comprehensive view of data health within an organisation.

Based on observability techniques used in IT systems, data observability tracks data pipelines and datasets, detecting any problems that require attention.

Data observability solutions can automate monitoring, alerting, and root cause analysis processes, as well as assist in planning and prioritising problem-solving efforts.
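Two of the simplest checks such a tool might run are freshness (has the data set been loaded recently?) and volume (is the row count close to what is expected?). The sketch below is illustrative only; the function name, thresholds, and data set figures are assumptions.

```python
from datetime import datetime, timedelta


def check_dataset(name, last_loaded, row_count, expected_rows, now,
                  max_age=timedelta(hours=24), tolerance=0.5):
    """Return alert messages for a data set that looks stale or oddly sized."""
    alerts = []
    if now - last_loaded > max_age:
        alerts.append(f"{name}: stale, last loaded {last_loaded:%Y-%m-%d %H:%M}")
    if abs(row_count - expected_rows) > tolerance * expected_rows:
        alerts.append(
            f"{name}: unexpected volume, {row_count} rows vs about {expected_rows} expected"
        )
    return alerts


now = datetime(2024, 6, 2, 9, 0)  # fixed so the example is reproducible
alerts = (
    check_dataset("orders", datetime(2024, 6, 2, 1, 0), 980, 1000, now)
    + check_dataset("clicks", datetime(2024, 5, 30, 1, 0), 120, 1000, now)
)
```

With these inputs the `orders` data set passes both checks, while `clicks` raises a freshness alert and a volume alert.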

Data Management Difficulties

The speed of business and the growth in the amount of data available are the primary causes of data management challenges today.

As the variety, velocity, and volume of data continue to increase, organisations are compelled to find more efficient management tools to keep up.

Some of the significant difficulties that organisations face include the following:

Lack of Data Insight

With data being collected and stored from an ever-increasing number and variety of sources such as sensors, smart devices, social media, and video cameras, it’s crucial for organisations to know what data they have, where it’s located, and how to utilise it effectively.

To extract meaningful insights in a timely manner, data management solutions must have the scale and performance capabilities to handle the vast amount of data.

Maintaining Data Management Performance

As organisations continue to collect, store, and utilise more data, they must ensure their database performance remains optimal.

This requires continuous monitoring of the types of queries being executed and making necessary changes to indexes to accommodate evolving queries, all without compromising performance.
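The effect of an index on a query can be observed directly. This sketch uses Python's `sqlite3` module and SQLite's `EXPLAIN QUERY PLAN` statement; the `orders` table and index name are invented, and the exact wording of the plan text varies between SQLite versions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 50, float(i)) for i in range(1000)],
)

QUERY = "SELECT * FROM orders WHERE customer_id = ?"


def plan(conn, sql, params):
    """Return the planner's description of how it would execute the query."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[3] for row in rows)  # the fourth column holds the detail text


before = plan(conn, QUERY, (7,))  # without an index: a scan of the whole table
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
after = plan(conn, QUERY, (7,))   # with the index: a search that uses it
```

Comparing `before` and `after` is a small-scale version of the monitoring loop described above: watch which queries run, then adjust indexes to match them.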

Regulatory Compliance

Compliance regulations are dynamic, complicated, and often differ between jurisdictions.

As a result, organisations need to be able to quickly and easily review their data to identify anything that falls under new or modified requirements.

Specifically, it’s important to detect, track, and monitor personally identifiable information (PII) to comply with increasingly stringent global privacy regulations.
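A very basic form of PII detection is pattern matching over text fields. The sketch below is deliberately simplistic: the two regular expressions are rough assumptions that will miss many cases and produce false positives, and real tools rely on far more thorough classification.

```python
import re

# Rough, illustrative patterns only.
PII_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "phone": re.compile(r"\+?\d[\d\s-]{8,}\d"),
}

rows = [
    {"id": 1, "note": "Call +44 20 7946 0000 after 5pm"},
    {"id": 2, "note": "Invoice sent to ann@example.com"},
    {"id": 3, "note": "No contact details"},
]


def scan_for_pii(rows):
    """Return (row id, column, PII type) for every text value matching a pattern."""
    findings = []
    for row in rows:
        for column, value in row.items():
            if not isinstance(value, str):
                continue
            for kind, pattern in PII_PATTERNS.items():
                if pattern.search(value):
                    findings.append((row["id"], column, kind))
    return findings


findings = scan_for_pii(rows)
```

Recording where each match was found, rather than just whether one exists, is what makes it possible to track and monitor the affected fields later.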

Processing & Converting Data

Simply collecting and identifying data is not enough to derive value from it.

The data must also be processed.

If the process of transforming the data into a format suitable for analysis is time-consuming and requires significant effort, organisations may be unable to analyse it effectively, resulting in the loss of valuable insights.

Effective Data Storage

Organisations store data in various systems, including data warehouses and unstructured data lakes that store data in any format in a single repository.

However, just collecting data is not enough as data scientists need to transform data from its original format into the shape, format, or model that they need for analysis.

Without a way to easily and quickly transform data, the potential value of the data is lost.

IT Agility

Organisations now have the option to choose between on-premises environments, cloud-based data management systems, or a hybrid of the two for storing and analysing their data.

To maintain maximum IT agility and lower costs, IT organisations should evaluate the level of consistency between their on-premises and cloud environments.

Data Management Best Practices

To overcome the data management challenges, organisations need to adopt a comprehensive set of best practices.

These practices may differ based on the industry and the type of data but they are crucial in addressing the current data management challenges.

Some of the best practices are listed below:

Discovery Layers

By providing a discovery layer on top of an organisation’s data tier, analysts and data scientists can easily search and browse through datasets, making the data more usable.
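At its simplest, a discovery layer is a searchable catalogue of data set metadata. The sketch below assumes a hypothetical catalogue held as a list of dictionaries; the data set names, descriptions, and tags are invented.

```python
catalog = [
    {"name": "sales_orders", "description": "Daily order lines from the web shop",
     "tags": ["sales", "orders"]},
    {"name": "sensor_readings", "description": "Raw temperature readings from devices",
     "tags": ["iot"]},
    {"name": "customer_master", "description": "Golden customer records",
     "tags": ["mdm", "sales"]},
]


def search(catalog, term):
    """Return the names of data sets whose name, description, or tags mention term."""
    term = term.lower()
    return [
        entry["name"]
        for entry in catalog
        if term in entry["name"].lower()
        or term in entry["description"].lower()
        or any(term in tag.lower() for tag in entry["tags"])
    ]


sales_datasets = search(catalog, "sales")
temperature_datasets = search(catalog, "temperature")
```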

Data Science Environment

Automating the data transformation work as much as possible is essential in a data science environment.

It can streamline the process of creating and evaluating data models.

By using a set of tools that eliminate the need for manual data transformation, analysts and data scientists can accelerate the hypothesis testing and model development process.

Autonomous Technology

Autonomous data capabilities leverage artificial intelligence and machine learning to continually monitor database queries and automatically optimise indexes as the queries evolve.

By doing so, the database can maintain optimal response times and eliminate the need for DBAs and data scientists to perform time-consuming manual tasks.

Data Discovery

Cutting-edge data management tools utilise data discovery to review data and detect the chains of connection that must be identified, tracked, and monitored for compliance across multiple jurisdictions.

As compliance regulations continue to evolve on a global scale, this functionality will become increasingly critical for risk and security officers.

Converged Databases

A converged database is a comprehensive database system that integrates native support for various modern data types and the latest development models into a single product.

The most advanced converged databases are capable of running a wide range of workloads, including those involving graph, Internet of Things (IoT), blockchain, and machine learning.

Scalability

The ultimate objective of consolidating data is to enable effective analysis that leads to better decision-making.

A highly scalable and performant database platform empowers organisations to rapidly analyse data from diverse sources using advanced analytics and machine learning, thus enhancing their ability to make informed business decisions.

Common Query Layers

New advancements in technology are facilitating seamless integration between data management repositories, making the differences between them largely invisible to users.

A unified query layer that encompasses various types of data storage enables analysts, data scientists, and applications to access data without the need to understand its storage location or manually convert it into a usable format.
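The idea can be sketched as a thin layer that hides where each data set lives. In this illustration the class names (`SqlSource`, `CsvSource`, `QueryLayer`) are hypothetical, an in-memory SQLite table stands in for a warehouse, and a CSV string stands in for a file in a data lake.

```python
import csv
import io
import sqlite3


class SqlSource:
    """Adapter for a table in a relational store."""

    def __init__(self, conn, table):
        self.conn, self.table = conn, table

    def rows(self):
        cur = self.conn.execute(f'SELECT * FROM "{self.table}"')
        columns = [col[0] for col in cur.description]
        return [dict(zip(columns, row)) for row in cur]


class CsvSource:
    """Adapter for delimited text, as might sit in a data lake."""

    def __init__(self, text):
        self.text = text

    def rows(self):
        return list(csv.DictReader(io.StringIO(self.text)))


class QueryLayer:
    """Single entry point: callers name a data set, not a storage system."""

    def __init__(self):
        self.sources = {}

    def register(self, name, source):
        self.sources[name] = source

    def select(self, name, where=lambda row: True):
        return [row for row in self.sources[name].rows() if where(row)]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER, name TEXT)")
conn.execute("INSERT INTO customers VALUES (1, 'Acme Ltd'), (2, 'Globex')")

layer = QueryLayer()
layer.register("customers", SqlSource(conn, "customers"))
layer.register("clicks", CsvSource("user,page\n1,/home\n2,/pricing\n"))

acme = layer.select("customers", lambda r: r["name"] == "Acme Ltd")
pricing = layer.select("clicks", lambda r: r["page"] == "/pricing")
```

Both calls to `select` look the same to the caller even though one reads a database table and the other parses text. Note that the CSV adapter returns every value as a string, so a fuller layer would also need to reconcile types.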

Benefits of Data Management

Organisations can reap many benefits from a competently executed data management strategy, including:

Competitive Advantage

A properly implemented data management strategy can provide businesses with potential advantages over their competitors by enhancing operational efficiency and facilitating informed decision-making.

Adaptability

Companies that effectively manage their data can enhance their agility, enabling them to identify market trends and capitalise on new business opportunities faster.

Risk Avoidance

Efficient data management can aid companies in avoiding data breaches, missteps in data collection, and other security and privacy issues related to data, which could negatively impact their reputation, result in unforeseen expenses, and subject them to legal risks.

Improved Performance

In the end, a robust data management strategy can enhance business performance by supporting the improvement of business strategies and processes.